In my previous blog post I described the IO costing for sort operation. It turned out that the calculation functions are central and used in other scenarios as well, like full table scan (FTS); as such, they are definitely worth giving it a second look.

So I did a similar low-level analysis for FTS and deduced more generic formulae.

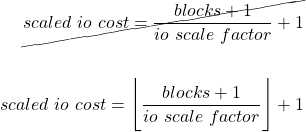

Expectedly, scaled IO cost depends on the number of blocks:

(1)

Both +1 are really just the safeguards against multiplication/division by zero, which would lead the whole cost calculation astray. But, as normally, blocks >> 1 and cost >>1, the equation 1 can be approximated as follows:

(2) ![]()

where

(3) ![]()

In the equation above, we can recognize the single-block read time (SREADTIM) in the numerator and multi-block read time (MREADTIM) in the denominator.

(4) ![]()

(5) ![]()

Consequently, io scale factor can be expressed by the means of SREAD and MREADTIM:

(6) ![]()

Everything gets perfectly clear after combining the equations 2 and 6, i.e. expressing also scaled io cost as a function of SREADTIME and MREADTIM:

(7) ![]()

That’s a well known formula documented in [Lewis 2006]. Simply put, the cost calculated in the equation 7 is the total multi-block read time divided by a single-block read time. Or, in other words, to paraphrase Jonathan Lewis: “the cost is total predicted time, expressed in units of the single-block read time”.

After having sorted out the general formula, I revisited the IO sort cost calculation. Predictably, the io scale factor calculation is just a variant of the general calculation with a special value for MBRC:

(8) ![]()

This means that the optimizer always foresees 64k IOs for sort. Consequently, the MBRC is simply the number of blocks that fit in 64k.

For FTS, the total IO cost equals scaled io cost.

(9) ![]()

But for sort, the total IO cost contains other components as well:

(10) ![]()

Multiplying scaled IO cost by two might mean that the data has to be read twice.

But what’s the rationale behind adding blocks? I don’t know. Both summands are expressed in different units anyway. I mean scaled io cost is measured in “single-block read time”, and blocks don’t have any units at all. And precisely this oddity is the reason why the sort cost significantly changes with the block size.

Related blog post:

References:

[Lewis 2006] Jonathan Lewis. (2006). Cost-Based Oracle Fundamentals

Updates on October 8, 2020

floor on the fraction was missing in the formula for io_scale_factor. This became apparent when I tried to apply it to Jonathan Lewis’ model for investigating an index FFS cost anomaly.